Analyzing the history of CVPR

As the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025 approaches, let’s take a look at the history of the conference and its workshops from 2017 to 2024 to see how topics and trends in artificial intelligence research have evolved over the years. Take the results with a grain of salt: some information that could be relevant to the analyses is discarded during the cleaning process. Part of the analysis is also based on keywords, and it relies on assumptions about how authors use them (e.g. a paper about image data is unlikely to mention the keyword audio in its title or abstract), which is not a perfect solution. This post aims to give some insight into the history of the conference, not to be a definitive analysis.

Note that some of the graphs show percentages of the total number of papers published in each year. Since the number of papers varies from year to year, raw counts can’t be compared directly across years; percentages instead show how papers are distributed within each year and how the focus of the academic community shifts over time. You can also interact with the visualizations here: zoom in on specific parts, enable or disable lines by clicking on their names in the legend, and hover over the points to see more information.
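
To make the normalization concrete, here is a minimal sketch of how a keyword’s yearly share could be computed. It is not the code used for this analysis: the paper records and their `year`, `title` and `abstract` keys are assumptions, and a paper counts once per keyword no matter how often the word appears.

```python
import re
from collections import Counter


def keyword_share_by_year(papers, keyword):
    """Return {year: percentage of that year's papers mentioning `keyword`}.

    Assumes each paper is a dict with hypothetical "year", "title" and
    "abstract" keys.
    """
    # \b word boundaries keep "graph" from matching inside "photograph".
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    totals = Counter()  # papers published per year
    hits = Counter()    # papers per year that mention the keyword
    for paper in papers:
        totals[paper["year"]] += 1
        if pattern.search(f"{paper['title']} {paper['abstract']}"):
            hits[paper["year"]] += 1
    # Divide by that year's total so years of different size become comparable.
    return {year: 100.0 * hits[year] / totals[year] for year in sorted(totals)}


papers = [
    {"year": 2023, "title": "Audio-visual speech separation", "abstract": "We fuse audio and video."},
    {"year": 2023, "title": "Photograph denoising", "abstract": "A CNN for images."},
]
print(keyword_share_by_year(papers, "audio"))  # {2023: 50.0}
print(keyword_share_by_year(papers, "graph"))  # {2023: 0.0}
```

Dividing by each year’s total is what lets a 2017 line sit on the same axis as a 2024 line even though 2024 had far more papers.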

Overall Statistics

Here you can see the number of published papers per year. The number grew every year except 2023, and more than three times as many papers were published in 2024 as in 2017.
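
Both claims can be checked with a few lines of arithmetic. The sketch below uses the yearly totals read from the data behind the chart that follows; the helper name is hypothetical.

```python
# Yearly totals as plotted in the papers-per-year chart.
papers_per_year = {
    2017: 1064, 2018: 1326, 2019: 1876, 2020: 1985,
    2021: 2174, 2022: 2632, 2023: 2358, 2024: 3488,
}


def year_over_year_change(counts):
    """Percentage change in paper count relative to the previous year."""
    years = sorted(counts)
    return {
        year: 100.0 * (counts[year] - counts[prev]) / counts[prev]
        for prev, year in zip(years, years[1:])
    }


changes = year_over_year_change(papers_per_year)
declines = [year for year, pct in changes.items() if pct < 0]
ratio = papers_per_year[2024] / papers_per_year[2017]
print(declines)         # [2023]
print(round(ratio, 2))  # 3.28
```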

[Figure: number of papers published per year, 2017 to 2024. x-axis: year; y-axis: papers]

Regarding the modalities used in the papers, images are still the most common, but the use of multiple modalities has increased significantly, and text has also gained ground, especially in 2024. The use of optical flow, graphs, and depth information has decreased in recent years, while the use of particles has remained relatively stable.
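
Several modality labels are multi-word terms (multi modal, optical flow, point cloud) that authors spell in different ways. The sketch below shows one way to match them, assuming a paper can mention several modalities at once; the keyword list mirrors the chart legend, but the matching rules are an illustrative assumption, not necessarily what this analysis used.

```python
import re

# Modality labels from the chart legend; the matching rules below are hypothetical.
MODALITIES = ["audio", "depth", "graph", "image", "mesh", "multi modal",
              "optical flow", "particle", "path", "point cloud", "text", "video"]


def modality_pattern(label):
    """Regex matching `label` as whole words, tolerating spelling variants.

    Words may be joined by a space, a hyphen or nothing ("multi modal",
    "multi-modal", "multimodal"); an optional trailing "s" covers simple
    plurals such as "images" or "point clouds".
    """
    words = [re.escape(word) for word in label.split()]
    return re.compile(r"\b" + r"[\s-]?".join(words) + r"s?\b", re.IGNORECASE)


MODALITY_PATTERNS = {label: modality_pattern(label) for label in MODALITIES}


def modalities_in(text):
    """Return every modality label mentioned in `text` (a paper can have several)."""
    return {label for label, pattern in MODALITY_PATTERNS.items() if pattern.search(text)}


print(sorted(modalities_in("Multi-modal fusion of point clouds and RGB images")))
# ['image', 'multi modal', 'point cloud']
```

Word boundaries also keep short labels such as path or graph from firing inside longer words like approach or photograph.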

[Figure: occurrences (%) of each modality per year, 2017 to 2024. Modalities: audio, depth, graph, image, mesh, multi modal, optical flow, particle, path, point cloud, text, video]

Papers often introduce new concepts, be it a new method, a new dataset, or a new architecture. The following graph shows the most common concepts introduced in the papers. Not surprisingly, algorithms are the most common concept; this category also covers new methods and approaches. Novel tasks have also been introduced over the years, which tends to go hand in hand with the creation of novel datasets. The introduction of new architectures, including new models, modules, and networks, also increased in the last year. The creation of new losses and metrics has been quite stable over the years, with very few papers introducing them.
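
The post does not spell out how a paper is judged to introduce a concept. One hypothetical heuristic is to look for phrases like "a new X" or "a novel X" in the title or abstract, grouping synonyms under each concept label as described above. The noun lists and the allowed gap of up to two words are assumptions chosen for illustration.

```python
import re

# Hypothetical grouping: each concept label collects the nouns it covers
# (e.g. "algorithms" also includes new methods and approaches).
CONCEPT_NOUNS = {
    "algorithms": ["algorithm", "method", "approach"],
    "architectures": ["architecture", "model", "module", "network"],
    "datasets": ["dataset"],
    "losses": ["loss"],
    "metrics": ["metric"],
    "tasks": ["task"],
}


def concept_pattern(nouns):
    """Match "new"/"novel", up to two intervening words, then one of `nouns`.

    Catches phrases such as "a novel loss" or "a new large-scale dataset",
    in singular or plural form.
    """
    alternatives = "|".join(re.escape(noun) for noun in nouns)
    return re.compile(
        rf"\b(?:new|novel)\s+(?:[\w-]+\s+){{0,2}}(?:{alternatives})(?:s|es)?\b",
        re.IGNORECASE,
    )


CONCEPT_PATTERNS = {concept: concept_pattern(nouns) for concept, nouns in CONCEPT_NOUNS.items()}


def concepts_in(text):
    """Return the set of concept labels a title or abstract claims to introduce."""
    return {concept for concept, pattern in CONCEPT_PATTERNS.items() if pattern.search(text)}


print(sorted(concepts_in("We introduce a novel large-scale dataset and a new loss for training.")))
# ['datasets', 'losses']
```

A phrase-based heuristic like this misses papers that never use the words "new" or "novel", so its counts would be a lower bound rather than an exact figure.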

[Figure: occurrences (%) of each introduced concept per year, 2017 to 2024. Concepts: algorithms, architectures, datasets, losses, metrics, tasks]

Regarding the common tasks in the papers, there is a steep increase in generation tasks, especially after 2022. This may be related to advances in large language models such as InstructGPT and ChatGPT in 2022, and to the release of foundation language models such as LLaMA in early 2023. Classification, detection, estimation, and recognition have declined in interest over the years, while prediction has only decreased recently. Tasks such as segmentation have remained relatively stable. Reasoning tasks have also increased significantly in the last year, but they still account for a small share of the published papers (about 3%).
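
Statements such as "declined over the years" and "only decreased recently" describe two different things: a long-run trend and the latest step. A small sketch, using made-up toy shares rather than the data in the chart, separates the two with a least-squares slope and a last-year difference; both helper names are hypothetical.

```python
def trend_slope(shares):
    """Least-squares slope of a topic's yearly share, in percentage points per year.

    `shares` maps year -> occurrences (%), like one line in the task chart.
    """
    years = sorted(shares)
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(shares[year] for year in years) / n
    cov = sum((year - mean_x) * (shares[year] - mean_y) for year in years)
    var = sum((year - mean_x) ** 2 for year in years)
    return cov / var


def last_step(shares):
    """Change between the two most recent years, in percentage points."""
    years = sorted(shares)
    return shares[years[-1]] - shares[years[-2]]


# Toy series that rises overall but drops in the final year.
toy = {2021: 5.0, 2022: 6.0, 2023: 7.0, 2024: 6.5}
print(trend_slope(toy))  # 0.55
print(last_step(toy))    # -0.5
```

A positive slope with a negative last step is the pattern described for prediction; a steadily negative slope matches the long-term decline of classification or recognition.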

[Figure: occurrences (%) of each task per year, 2017 to 2024. Tasks: captioning, classification, clustering, counting, detection, estimation, forecasting, generation, identification, navigation, prediction, reasoning, recognition, regression, retrieval, segmentation, tracking, translation, verification]

Let’s dive a little deeper into the tasks.

Tasks focused on security and privacy have been around for a while, but several of them have received noticeably more attention in the last year. Spoofing detection is crucial for applications such as identity recognition, where attackers may try to use photos or videos to impersonate someone else, and it seems to have gained urgency as deepfake technologies have become more prevalent.

[Interactive chart: occurrences (%) by year, 2017 to 2024, by task: adversarial attack, anomaly detection, disambiguation, face verification, fact checking, forensics, fraud detection, privacy, safety, spamming, spoofing, spotting]
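
The y-axis in these charts, occurrences (%), gives the number of papers that mention a term as a share of all papers published that year. This is what lets years with very different paper counts share one scale. A minimal sketch of that computation follows. It assumes each paper is a record with hypothetical `year`, `title`, and `abstract` fields, and that a term is matched as a whole word, case-insensitively, and counted at most once per paper. The cleaning and matching rules actually used for this post may differ.

```python
import re
from collections import Counter


def occurrence_percentage(papers, keyword):
    """Return {year: % of that year's papers whose title or abstract mention keyword}.

    Hypothetical helper: whole-word, case-insensitive matching, and a paper
    counts once no matter how many times the keyword appears in it.
    """
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    totals = Counter()  # papers published per year
    hits = Counter()    # papers per year that mention the keyword
    for paper in papers:
        totals[paper["year"]] += 1
        text = f"{paper['title']} {paper['abstract']}"
        if pattern.search(text):
            hits[paper["year"]] += 1
    # Normalize by each year's total so years of different sizes are comparable.
    return {year: 100.0 * hits[year] / totals[year] for year in sorted(totals)}


# Made-up records, for illustration only.
papers = [
    {"year": 2017, "title": "Deep image denoising", "abstract": "We remove noise."},
    {"year": 2017, "title": "Object detection", "abstract": "Boxes everywhere."},
    {"year": 2024, "title": "Diffusion models for denoising", "abstract": "..."},
    {"year": 2024, "title": "Video synthesis", "abstract": "Frames."},
    {"year": 2024, "title": "Segmentation", "abstract": "Masks."},
    {"year": 2024, "title": "Depth", "abstract": "Denoising depth maps."},
]
print(occurrence_percentage(papers, "denoising"))  # {2017: 50.0, 2024: 50.0}
```

Because the denominator changes every year, a flat line means a topic kept its share of the conference even as the total number of papers grew.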

Explainability and interpretability have gained traction in the last few years, with a marked increase in the number of papers on the topic around 2019. This followed the rise of conferences and workshops dedicated to model transparency, interpretability, and fairness, such as ACM FAccT and VISxAI. Explainability is crucial for building trust in AI systems and for checking that they make decisions based on valid reasoning. One of the areas that has seen the most investment in recent years is model grounding, the process of tying a model's predictions to specific features in the input data. This is particularly important in applications such as image classification and question answering, where it is essential to understand which parts of an input (text or image) are driving the model's predictions.
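
A simple way to see which parts of an input drive a prediction is occlusion sensitivity: hide one region at a time and measure how much the model's score drops. The sketch below is a generic attribution illustration, not the grounding method of any particular CVPR paper. The linear `score` model, the 3x3 "image", and the zero fill value are all assumptions, chosen so the result can be checked by hand.

```python
def score(image, weights):
    """Hypothetical model: a linear score over pixel values."""
    return sum(
        w * x
        for row_w, row_x in zip(weights, image)
        for w, x in zip(row_w, row_x)
    )


def occlusion_map(image, weights, fill=0.0):
    """Score drop when each pixel is replaced by `fill`; larger means more important."""
    base = score(image, weights)
    heat = []
    for i, row in enumerate(image):
        heat_row = []
        for j in range(len(row)):
            occluded = [r[:] for r in image]  # copy so the original stays untouched
            occluded[i][j] = fill
            heat_row.append(base - score(occluded, weights))
        heat.append(heat_row)
    return heat


image = [[0.0, 1.0, 0.0],
         [1.0, 1.0, 1.0],
         [0.0, 1.0, 0.0]]
weights = [[0.1, 0.2, 0.1],   # assumed weights: the model relies mostly
           [0.2, 2.0, 0.2],   # on the center pixel
           [0.1, 0.2, 0.1]]

for row in occlusion_map(image, weights):
    print([round(v, 2) for v in row])
# prints rows [0.0, 0.2, 0.0], [0.2, 2.0, 0.2], [0.0, 0.2, 0.0]
```

For a linear scorer, each pixel's importance is simply its weight times its value, which is why the center dominates. With a real network the loop is the same, but every occluded copy needs its own forward pass.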

[Interactive chart: occurrences (%) by year, 2017 to 2024, by word: explainability, grounding, interpretability, traceability]

Visual tasks such as image denoising have received a lot of attention in recent years. This may be due to the growing importance of image quality in computer vision applications, the development of new techniques for improving it, and the increased capacity of visual models to handle larger inputs. This category of tasks also includes deblurring, dehazing, demoiréing, deraining, and similar restoration tasks. Image editing and image generation tasks have also grown significantly.
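
To make the task concrete: denoising takes a corrupted image and estimates the clean one. The toy sketch below uses a classical 3x3 median filter, which removes isolated "salt-and-pepper" outliers. It is a baseline for illustration, not a method from the surveyed papers. The tiny integer image and the clamp-to-edge border handling are assumptions.

```python
from statistics import median


def median_filter(image):
    """3x3 median filter; border pixels reuse their nearest in-bounds neighbors."""
    h, w = len(image), len(image[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            # Collect the 3x3 neighborhood, clamping indices at the image edges.
            window = [
                image[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                for di in (-1, 0, 1)
                for dj in (-1, 0, 1)
            ]
            row.append(median(window))
        out.append(row)
    return out


clean = [[10] * 5 for _ in range(5)]  # a flat gray 5x5 image
noisy = [r[:] for r in clean]
noisy[2][2] = 255  # one "salt" pixel
noisy[0][2] = 0    # one "pepper" pixel on the top edge
print(median_filter(noisy) == clean)  # True
```

On real photographs the same filter also smooths away fine texture, a trade-off that learned denoising models aim to reduce.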

[Interactive chart: occurrences (%) by year, 2017 to 2024, by task: colorization, denoising, editing, image enhancement, image filling, image generation, image retrieval, image segmentation, image to image, localization, matching, odometry, quality assessment, reconstruction, removal, style transfer, super resolution]

Language tasks have also fluctuated over the years, particularly those that focus on dialog and conversation: the share of dialog papers declined steadily from 2018 to 2023 before rebounding in 2024. Conversational interfaces let users interact with AI systems in a more natural and intuitive way, which makes dialog systems, including chatbots, virtual assistants, and other conversational agents, an attractive research target. The development of large language models has likely contributed to the recent rebound, as these models have demonstrated impressive capabilities in generating human-like text and understanding context.

[Interactive chart: occurrences (%) by year, 2017 to 2024, by task: dialog, language translation, question answering, summarization, text generation]

Multimodal tasks are among the current trends in artificial intelligence. These tasks combine different modalities, such as audio, text, and images, to improve model performance and to solve problems that require understanding how those modalities relate to one another. The number of papers on these tasks has increased significantly in recent years, led by image-text alignment, image synthesis, and video synthesis, while visual question answering has held a roughly steady share. This trend is likely to continue as researchers explore new ways to combine modalities and improve model performance.
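
Image-text alignment is commonly framed as mapping both modalities into a shared embedding space and scoring pairs by cosine similarity, as in CLIP-style contrastive models. The sketch below shows only that matching step and assumes the embeddings already exist. The three-dimensional vectors are made up and stand in for the outputs of trained image and text encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def best_captions(image_embs, text_embs):
    """For each image embedding, the index of the most similar caption embedding."""
    return [
        max(range(len(text_embs)), key=lambda t: cosine(img, text_embs[t]))
        for img in image_embs
    ]


# Hypothetical embeddings; in practice these come from trained encoders.
image_embs = [[0.9, 0.1, 0.0],    # photo of a dog
              [0.0, 0.2, 0.95]]   # photo of a car
text_embs = [[0.1, 0.0, 1.0],     # "a red car"
             [1.0, 0.2, 0.1]]     # "a dog on grass"
print(best_captions(image_embs, text_embs))  # [1, 0]
```

Contrastive training is what makes this work: it pushes the similarity of matching image-caption pairs above that of mismatched ones, so a plain argmax over cosine similarities picks the right caption.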

[Interactive chart: occurrences (%) by year, 2017 to 2024, by task: alignment, audio synthesis, captioning, image synthesis, referring expression comprehension, video question answering, video synthesis, visual grounding, visual question answering]

Here we focus on how often a few keywords appear in the LLM-related papers. The keywords are:

- Chain-of-Thought, Tree-of-Thought, and other "-of-Thought" variations - prompting techniques that lead the model to break a complex task into smaller, more manageable steps, allowing it to reason through the problem more effectively;

- Agent - refers to the use of LLMs as agents that can perform tasks autonomously, often in conjunction with other tools or systems;

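- Distillation - a technique that compresses a large (teacher) model into a smaller, more efficient (student) model, typically by training the student to reproduce the teacher's outputs, while retaining most of the teacher's performance;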

- Few-shot prompting - a prompting technique that includes a few examples of the task in the prompt, allowing the model to infer what is expected and perform well on similar inputs;

- Fine-tuning - the process of training a pre-trained model on a specific task or dataset to improve its performance;

- Reinforcement Learning (RL) - a type of machine learning where an agent learns to make decisions by receiving feedback from its environment in the form of rewards or penalties;

- Retrieval Augmented Generation (RAG) - a technique that retrieves relevant documents from an external source (e.g. a search index or a vector database) and adds them to the model's input, so that the generated output is grounded in that information;

- Self-Instruct - a technique in which a model generates its own instruction-following examples, which are filtered and then used to fine-tune the model;

- Tokenizer - a component of language models that converts text into a format that the model can understand, often by breaking it down into smaller units called tokens;

- Tool - refers to the use of external tools or systems in conjunction with LLMs to perform tasks more effectively;

- Zero-shot prompting - a prompting technique that allows the model to perform tasks without any prior examples or training on that specific task.

Few-shot and zero-shot prompting appear to be losing the academic community's interest in favor of RAG, "-of-Thought" reasoning, and novel fine-tuning techniques. Meanwhile, LLM agents that can tackle harder tasks and use tools, one of the hottest topics in the field, are starting to show up: "agent" appears in these papers for the first time in 2024.

{"data": [{"customdata": [["* of thought"], ["* of thought"], ["* of thought"], ["* of thought"], ["* of thought"], ["* of thought"], ["* of thought"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "* of thought", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "* of thought", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAfDQhL2wVBEA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["agent"], ["agent"], ["agent"], ["agent"], ["agent"], ["agent"], ["agent"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "agent", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "agent", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAABbUZmK5zHEA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["distillation"], ["distillation"], ["distillation"], ["distillation"], ["distillation"], ["distillation"], ["distillation"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "distillation", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "distillation", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAABJQI7jOI7jOBZAus6xRiIgDkA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["few shot"], ["few shot"], ["few shot"], ["few shot"], ["few shot"], ["few shot"], ["few shot"]], "hovertemplate": 
"word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "few shot", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "few shot", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAKuqqqqqqjBAMU653d/BJUA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["finetuning"], ["finetuning"], ["finetuning"], ["finetuning"], ["finetuning"], ["finetuning"], ["finetuning"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "finetuning", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "finetuning", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAI7jOI7jOCZAus6xRiIgLkA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["reinforcement learning"], ["reinforcement learning"], ["reinforcement learning"], ["reinforcement learning"], ["reinforcement learning"], ["reinforcement learning"], ["reinforcement learning"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "reinforcement learning", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "reinforcement learning", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAASUAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAKuqqqqqqjBA3P0dXPaGQEA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["retrieval augmented generation"], ["retrieval augmented generation"], ["retrieval augmented generation"], ["retrieval augmented generation"], ["retrieval augmented 
generation"], ["retrieval augmented generation"], ["retrieval augmented generation"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "retrieval augmented generation", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "retrieval augmented generation", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAOUAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAABJQKuqqqqqqkBAayQC4qMJQ0A="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["tokenizer"], ["tokenizer"], ["tokenizer"], ["tokenizer"], ["tokenizer"], ["tokenizer"], ["tokenizer"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "tokenizer", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "tokenizer", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAfDQhL2wV9D8="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["tool"], ["tool"], ["tool"], ["tool"], ["tool"], ["tool"], ["tool"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences (%)=%{y:.3f}<extra></extra>", "legendgroup": "tool", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "tool", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAOUAAAAAAAAAAAAAAAAAAAElAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAMU653d/BFUA="}, "yaxis": "y", "type": "scatter"}, {"customdata": [["zero shot"], ["zero shot"], ["zero shot"], ["zero shot"], ["zero shot"], ["zero shot"], ["zero shot"]], "hovertemplate": "word=%{customdata[0]}<br>year=%{x}<br>occurrences 
(%)=%{y:.3f}<extra></extra>", "legendgroup": "zero shot", "line": {"dash": "solid"}, "marker": {"symbol": "circle"}, "mode": "lines+markers", "name": "zero shot", "orientation": "v", "showlegend": true, "x": {"dtype": "i2", "bdata": "4QfiB+QH5QfmB+cH6Ac="}, "xaxis": "x", "y": {"dtype": "f8", "bdata": "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAABJQBzHcRzHcUNAoifVVzI/M0A="}, "yaxis": "y", "type": "scatter"}], "layout": {"legend": {"title": {"text": "word"}, "tracegroupgap": 0}, "xaxis": {"anchor": "y", "domain": [0.0, 1.0], "title": {"text": "year"}}, "yaxis": {"anchor": "x", "domain": [0.0, 1.0], "title": {"text": "occurrences (%)"}}}}
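The keyword plot above appears to report, for each year, the percentage of LLM-related papers whose text mentions each keyword (a paper counts once per keyword, so a year's values can add up to more than 100%). The following is a minimal sketch of that kind of count, not the code behind the plot: it assumes each paper is a dict with hypothetical `year`, `title` and `abstract` fields, that the LLM-related papers have already been selected, and the regular expressions are illustrative. Lowercasing and replacing hyphens with spaces is what lets "Chain-of-Thought", "Tree-of-Thoughts" and similar spellings fall under a single `* of thought` entry.

```python
import re
from collections import defaultdict

# Illustrative patterns (an assumption, not the original rules), applied after
# normalize() has lowercased the text and turned hyphens into spaces.
KEYWORD_PATTERNS = {
    "* of thought": r"\b\w+ of thoughts?\b",  # chain/tree/graph of thought(s), ...
    "agent": r"\bagents?\b",
    "few shot": r"\bfew shot\b",
    "zero shot": r"\bzero shot\b",
    "retrieval augmented generation": r"\bretrieval augmented generation\b",
}


def normalize(text: str) -> str:
    """Lowercase the text and replace hyphens with spaces."""
    return text.lower().replace("-", " ")


def keyword_share_by_year(papers):
    """Return {keyword: {year: % of that year's papers mentioning the keyword}}."""
    totals = defaultdict(int)                     # papers per year
    hits = defaultdict(lambda: defaultdict(int))  # keyword -> year -> matching papers
    for paper in papers:
        year = paper["year"]
        totals[year] += 1
        text = normalize(paper["title"] + " " + paper["abstract"])
        for keyword, pattern in KEYWORD_PATTERNS.items():
            if re.search(pattern, text):          # each paper counts at most once
                hits[keyword][year] += 1
    return {
        keyword: {year: 100 * hits[keyword][year] / totals[year] for year in sorted(totals)}
        for keyword in KEYWORD_PATTERNS
    }


# Hypothetical toy data standing in for the (already filtered) LLM-related papers.
papers = [
    {"year": 2023, "title": "Chain-of-Thought Prompting for VQA", "abstract": "We study zero-shot reasoning."},
    {"year": 2023, "title": "An LLM Agent for Robots", "abstract": "The agent calls external tools."},
    {"year": 2024, "title": "Tree-of-Thoughts for Video", "abstract": "Few-shot examples help."},
    {"year": 2024, "title": "Retrieval-Augmented Generation for Images", "abstract": ""},
]

shares = keyword_share_by_year(papers)
print(shares["* of thought"])  # {2023: 50.0, 2024: 50.0}
```

As noted at the start of the post, a keyword match is only a proxy for a topic: `agent`, for example, also matches reinforcement learning papers unrelated to LLMs, which is one reason to restrict the count to LLM-related papers first.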

Information about Authors

Now let’s look at the authors of the papers. This first graph shows how many authors have published a given number of papers. As we can see, most authors have published only one paper at the conference. Out of 33,861 authors, only 1,308 have 10 or more accepted papers.

{"data": [{"hovertemplate": "Number of papers=%{text}<br>Authors=%{y}<extra></extra>", "legendgroup": "", "marker": {"pattern": {"shape": ""}}, "name": "", "orientation": "v", "showlegend": false, "text": {"dtype": "f8", "bdata": "AAAAAADAYEAAAAAAAMBdQAAAAAAAAFlAAAAAAACAVUAAAAAAAMBUQAAAAAAAAFRAAAAAAADAU0AAAAAAAMBSQAAAAAAAwFFAAAAAAACAUUAAAAAAAEBRQAAAAAAAgFBAAAAAAABAUEAAAAAAAABQQAAAAAAAgE9AAAAAAAAAT0AAAAAAAIBOQAAAAAAAgE1AAAAAAAAATUAAAAAAAABMQAAAAAAAgEtAAAAAAAAAS0AAAAAAAIBKQAAAAAAAAEpAAAAAAACASUAAAAAAAABJQAAAAAAAgEhAAAAAAAAASEAAAAAAAIBHQAAAAAAAAEdAAAAAAAAARkAAAAAAAIBFQAAAAAAAAEVAAAAAAACAREAAAAAAAABEQAAAAAAAgENAAAAAAAAAQ0AAAAAAAIBCQAAAAAAAAEJAAAAAAACAQUAAAAAAAABBQAAAAAAAgEBAAAAAAAAAQEAAAAAAAAA/QAAAAAAAAD5AAAAAAAAAPUAAAAAAAAA8QAAAAAAAADtAAAAAAAAAOkAAAAAAAAA5QAAAAAAAADhAAAAAAAAAN0AAAAAAAAA2QAAAAAAAADVAAAAAAAAANEAAAAAAAAAzQAAAAAAAADJAAAAAAAAAMUAAAAAAAAAwQAAAAAAAAC5AAAAAAAAALEAAAAAAAAAqQAAAAAAAAChAAAAAAAAAJkAAAAAAAAAkQAAAAAAAACJAAAAAAAAAIEAAAAAAAAAcQAAAAAAAABhAAAAAAAAAFEAAAAAAAAAQQAAAAAAAAAhAAAAAAAAAAEAAAAAAAADwPw=="}, "textposition": "auto", "x": {"dtype": "i2", "bdata": "hgB3AGQAVgBTAFAATwBLAEcARgBFAEIAQQBAAD8APgA9ADsAOgA4ADcANgA1ADQAMwAyADEAMAAvAC4ALAArACoAKQAoACcAJgAlACQAIwAiACEAIAAfAB4AHQAcABsAGgAZABgAFwAWABUAFAATABIAEQAQAA8ADgANAAwACwAKAAkACAAHAAYABQAEAAMAAgABAA=="}, "xaxis": "x", "y": {"dtype": "i2", "bdata": "AQABAAEAAQABAAEAAQABAAEAAQABAAEAAQABAAIAAQABAAEABQAEAAIAAQABAAMAAQACAAIABAABAAIABAAEAAQAAgAHAAgACwAGAAsABAAFAAYAFAALABIACwASABEAEgAVAB4AGAAWACQAIgAyACMALABAAFEAWABrAHsAlgCzAAIBKgGeAUMCSQOXBW8J3RU8UQ=="}, "yaxis": "y", "type": "bar"}], "layout": {"xaxis": {"anchor": "y", "domain": [0.0, 1.0], "title": {"text": "Number of papers"}}, "yaxis": {"anchor": "x", "domain": [0.0, 1.0], "title": {"text": "Authors"}}, "legend": {"tracegroupgap": 0}, "barmode": "relative"}}

Here are the top 10 authors with the most papers:

| Author | Papers |
|---|---|
| Luc Van Gool | 134 |
| Radu Timofte | 119 |
| Lei Zhang | 100 |
| Yi Yang | 86 |
| Yu Qiao | 83 |
| Dacheng Tao | 80 |
| Ming-Hsuan Yang | 79 |
| Qi Tian | 75 |
| Marc Pollefeys | 71 |
| Xiaogang Wang | 70 |
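Both the histogram and the top-10 table boil down to counting how many papers each author appears on. A minimal sketch with `collections.Counter`, assuming a hypothetical list of papers where each paper has an `authors` list of name strings (the toy names and the `summarize` helper are made up for illustration):

```python
from collections import Counter


def papers_per_author(papers):
    """Map each author name to the number of papers it appears on."""
    counts = Counter()
    for paper in papers:
        # set() avoids double-counting a name listed twice on one paper
        counts.update(set(paper["authors"]))
    return counts


def summarize(counts, min_papers=10):
    """Return (number of authors, authors with >= min_papers papers, histogram)."""
    histogram = Counter(counts.values())  # n papers -> how many authors have n papers
    prolific = sum(1 for n in counts.values() if n >= min_papers)
    return len(counts), prolific, histogram


papers = [  # hypothetical toy data
    {"authors": ["Alice", "Bob"]},
    {"authors": ["Alice", "Carol"]},
    {"authors": ["Alice"]},
    {"authors": ["Bob", "Dave"]},
]

counts = papers_per_author(papers)
print(counts.most_common(2))  # [('Alice', 3), ('Bob', 2)]
total, prolific, histogram = summarize(counts, min_papers=2)
print(total, prolific)  # 4 2
```

Keying on the name string is a simplification: two different researchers who share a name are merged into one entry, and a researcher whose name is spelled differently across papers is split into several.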

Now let’s look at the number of authors per paper. Most papers have between 2 and 7 authors, but a few have a very large number of authors, such as *Why Is the Winner the Best?*, with 125 authors, and *The Ninth NTIRE 2024 Efficient Super-Resolution Challenge Report*, with a staggering 134 authors. The former is a multi-center study of all 80 competitions held as part of IEEE ISBI 2021 and MICCAI 2021, while the latter summarizes the results of the NTIRE 2024 Efficient Super-Resolution challenge, held as part of the NTIRE workshop at CVPR 2024.

{"data": [{"hovertemplate": "Number of authors=%{x}<br>Number of papers=%{text}<extra></extra>", "legendgroup": "", "marker": {"pattern": {"shape": ""}}, "name": "", "orientation": "v", "showlegend": false, "text": {"dtype": "f8", "bdata": "AAAAAAAgZ0AAAAAAAByWQAAAAAAAoqZAAAAAAAAArEAAAAAAAE6pQAAAAAAA2KJAAAAAAADklkAAAAAAALiIQAAAAAAA0HhAAAAAAADAaUAAAAAAAIBcQAAAAAAAAFBAAAAAAAAAOUAAAAAAAAA3QAAAAAAAACRAAAAAAAAAJkAAAAAAAAAQQAAAAAAAAABAAAAAAAAA8D8AAAAAAAAQQAAAAAAAAABAAAAAAAAAAEAAAAAAAAAQQAAAAAAAAAhAAAAAAAAA8D8AAAAAAAAIQAAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAAAEAAAAAAAAAAQAAAAAAAAPA/AAAAAAAA8D8AAAAAAAAAQAAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAACEAAAAAAAADwPwAAAAAAAPA/AAAAAAAACEAAAAAAAADwPwAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAA8D8AAAAAAADwPwAAAAAAAPA/AAAAAAAA8D8="}, "textposition": "auto", "x": {"dtype": "i2", "bdata": "AQACAAMABAAFAAYABwAIAAkACgALAAwADQAOAA8AEAARABIAEwAUABUAFgAXABgAGwAcAB8AIQAiACMAJAAlACcAKQAqACsALQAwADEANQA3ADgAOgBCAEMARABOAE8AVQBYAF0AZABlAGwAcQBzAH0AhgA="}, "xaxis": "x", "y": {"dtype": "i2", "bdata": "uQCHBVELAA6nDGwJuQUXA40BzgByAEAAGQAXAAoACwAEAAIAAQAEAAIAAgAEAAMAAQADAAEAAQABAAEAAgACAAEAAQACAAEAAQABAAEAAQABAAEAAwABAAEAAwABAAEAAQABAAEAAQABAAEAAQABAAEAAQA="}, "yaxis": "y", "type": "bar"}], "layout": {"xaxis": {"anchor": "y", "domain": [0.0, 1.0], "title": {"text": "Number of authors"}}, "yaxis": {"anchor": "x", "domain": [0.0, 1.0], "title": {"text": "Number of papers"}}, "legend": {"tracegroupgap": 0}, "barmode": "relative"}}
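The authors-per-paper plot is a histogram of author-list lengths. A sketch under the same assumption of a hypothetical `authors` field per paper, including a helper (also hypothetical) that computes the share of papers whose author count falls in a range, such as the 2 to 7 authors mentioned above:

```python
from collections import Counter


def authors_per_paper(papers):
    """Histogram: number of authors -> number of papers with that many authors."""
    return Counter(len(paper["authors"]) for paper in papers)


def share_with_author_count(histogram, low, high):
    """Percentage of papers whose author count lies in [low, high]."""
    total = sum(histogram.values())
    in_range = sum(n_papers for n_authors, n_papers in histogram.items() if low <= n_authors <= high)
    return 100 * in_range / total


papers = [  # hypothetical toy data
    {"authors": ["A"]},
    {"authors": ["A", "B"]},
    {"authors": ["A", "B", "C"]},
    {"authors": ["B", "C", "D"]},
    {"authors": [f"Author {i}" for i in range(8)]},
]

histogram = authors_per_paper(papers)
print(sorted(histogram.items()))  # [(1, 1), (2, 1), (3, 2), (8, 1)]
print(share_with_author_count(histogram, 2, 7))  # 60.0
print(max(histogram))  # 8, the size of the largest author list
```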

Since most papers have multiple authors, it is common to see the same authors collaborating repeatedly. The most frequent pair is Jiwen Lu and Jie Zhou, who have co-authored 57 papers. They are followed by Luc Van Gool and Radu Timofte with 43 papers, and by Tao Xiang and Yi-Zhe Song with 38 papers. The top 10 most frequent pairs of authors are:

| Author 1 | Author 2 | Papers |
|---|---|---|
| Jiwen Lu | Jie Zhou | 57 |
| Luc Van Gool | Radu Timofte | 43 |
| Tao Xiang | Yi-Zhe Song | 38 |
| Fahad Shahbaz Khan | Salman Khan | 33 |
| Ting Yao | Tao Mei | 32 |
| Xiaogang Wang | Hongsheng Li | 28 |
| Shiguang Shan | Xilin Chen | 27 |
| Richa Singh | Mayank Vatsa | 26 |
| Dong Chen | Fang Wen | 24 |
| Yi-Zhe Song | Ayan Kumar Bhunia | 24 |
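Counting co-author pairs amounts to enumerating every unordered pair of names on each paper and tallying them. A minimal sketch with `itertools.combinations`, again assuming a hypothetical `authors` list per paper (the names below are made up):

```python
from collections import Counter
from itertools import combinations


def coauthor_pairs(papers):
    """Count how many papers each unordered pair of authors shares."""
    pairs = Counter()
    for paper in papers:
        # Sorting makes ("Bob", "Alice") and ("Alice", "Bob") the same key;
        # set() drops duplicate names within one author list.
        authors = sorted(set(paper["authors"]))
        pairs.update(combinations(authors, 2))
    return pairs


papers = [  # hypothetical toy data
    {"authors": ["Bob", "Alice", "Carol"]},
    {"authors": ["Alice", "Bob"]},
    {"authors": ["Carol", "Dave"]},
]

pairs = coauthor_pairs(papers)
print(pairs.most_common(1))  # [(('Alice', 'Bob'), 2)]
```

The number of pairs grows quadratically with the length of the author list: a paper with n authors contributes n(n-1)/2 pairs, which for the 134-author NTIRE report is 8,911 pairs from a single paper.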

Although single-author papers are rare, 185 papers fall into this category. Notable examples include work introducing novel loss functions (Jonathan T. Barron, Takumi Kobayashi) and improved transformer architectures and post-training techniques (Takumi Kobayashi, Jing Ma). The table below lists the authors with the most single-author papers:

| Author | Papers |
|---|---|
| Takumi Kobayashi | 4 |
| Anant Khandelwal, Takuhiro Kaneko | 3 |
| Andrey V. Savchenko, Chong Yu, Dimitrios Kollias, Edgar A. Bernal, Jamie Hayes, Magnus Oskarsson, Ming Li, Oleksii Sidorov, Ren Yang, Rowel Atienza, Sanghwa Hong, Satoshi Ikehata, Shunta Maeda, Stamatios Lefkimmiatis, Ying Zhao | 2 |
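The single-author table groups authors with the same number of solo papers into one row, with the names in alphabetical order. A sketch of that grouping, assuming the same hypothetical `authors` field and toy names; `single_author_rows` and `to_markdown` are illustrative helpers, the latter rendering the rows in the Markdown table format used in this post:

```python
from collections import Counter, defaultdict


def single_author_rows(papers, min_papers=2):
    """Group authors by how many single-author papers they have (highest first)."""
    solo = Counter(paper["authors"][0] for paper in papers if len(paper["authors"]) == 1)
    by_count = defaultdict(list)
    for author, n in solo.items():
        if n >= min_papers:
            by_count[n].append(author)
    # One row per paper count; tied names are sorted alphabetically in one cell.
    return [(", ".join(sorted(by_count[n])), n) for n in sorted(by_count, reverse=True)]


def to_markdown(rows):
    """Render (authors, papers) rows as a two-column Markdown table."""
    lines = ["| Author | Papers |", "|---|---|"]
    lines += [f"| {names} | {n} |" for names, n in rows]
    return "\n".join(lines)


papers = [  # hypothetical toy data
    {"authors": ["Erin"]}, {"authors": ["Erin"]}, {"authors": ["Erin"]},
    {"authors": ["Dan"]}, {"authors": ["Dan"]},
    {"authors": ["Bea"]}, {"authors": ["Bea"]},
    {"authors": ["Cal"]},
    {"authors": ["Erin", "Dan"]},  # multi-author papers are ignored
]

print(to_markdown(single_author_rows(papers)))
```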

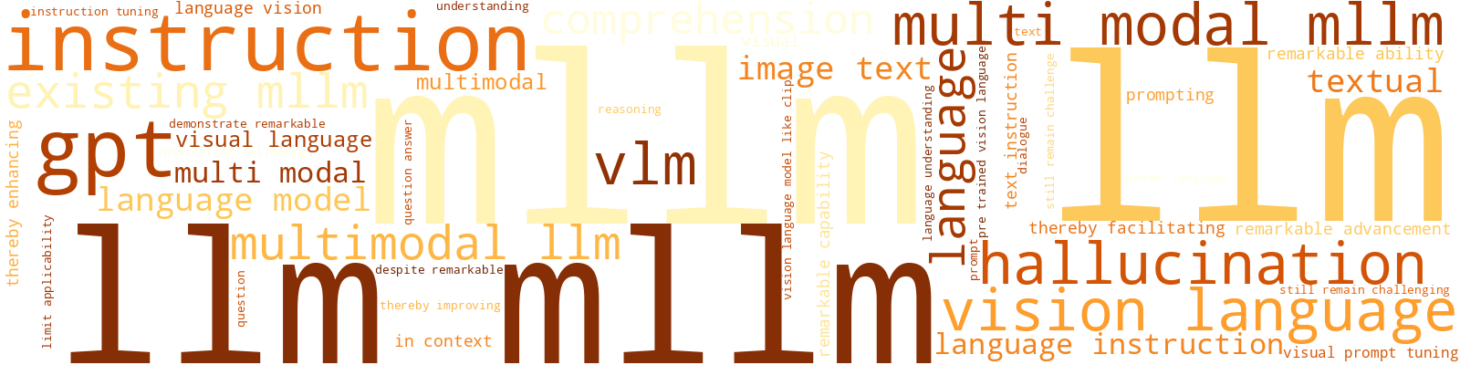

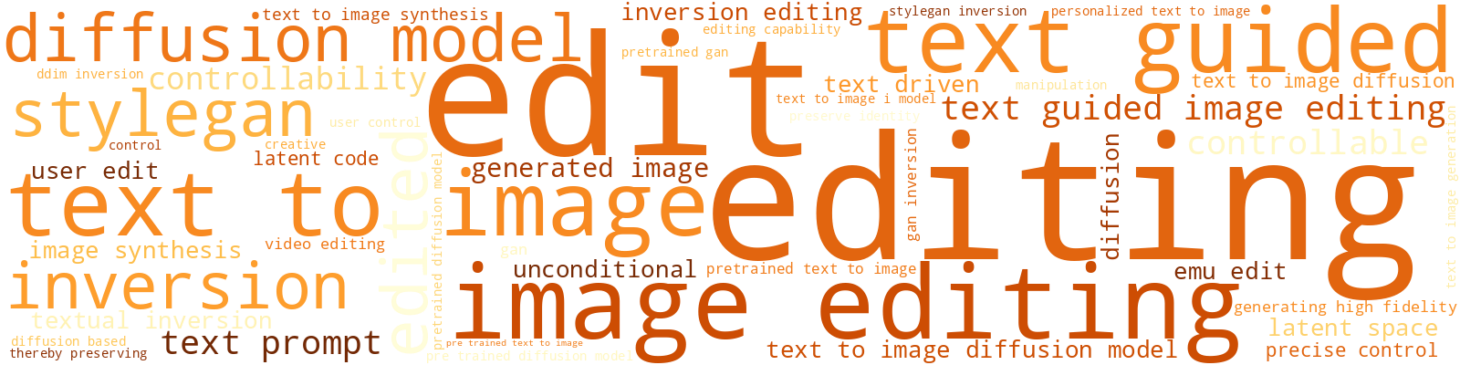

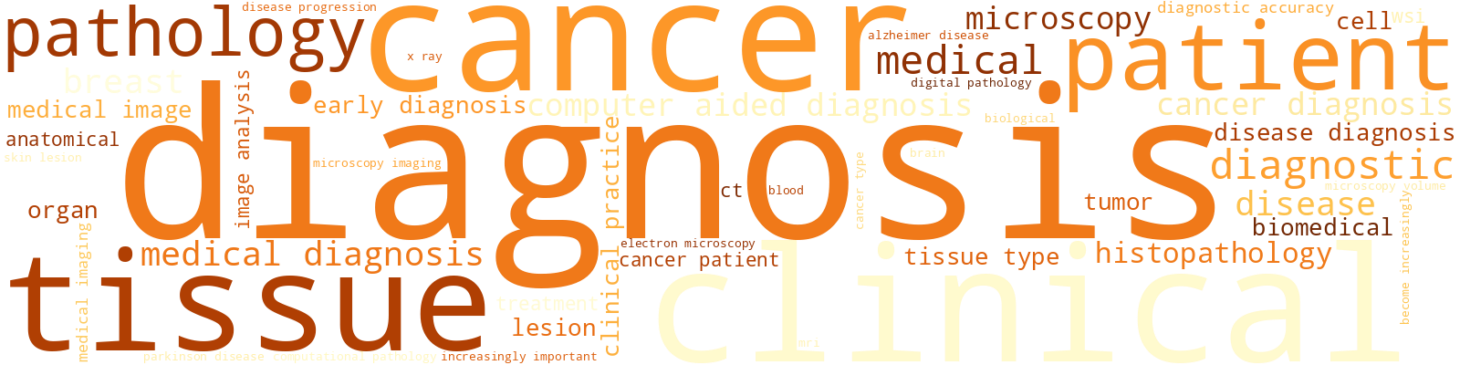

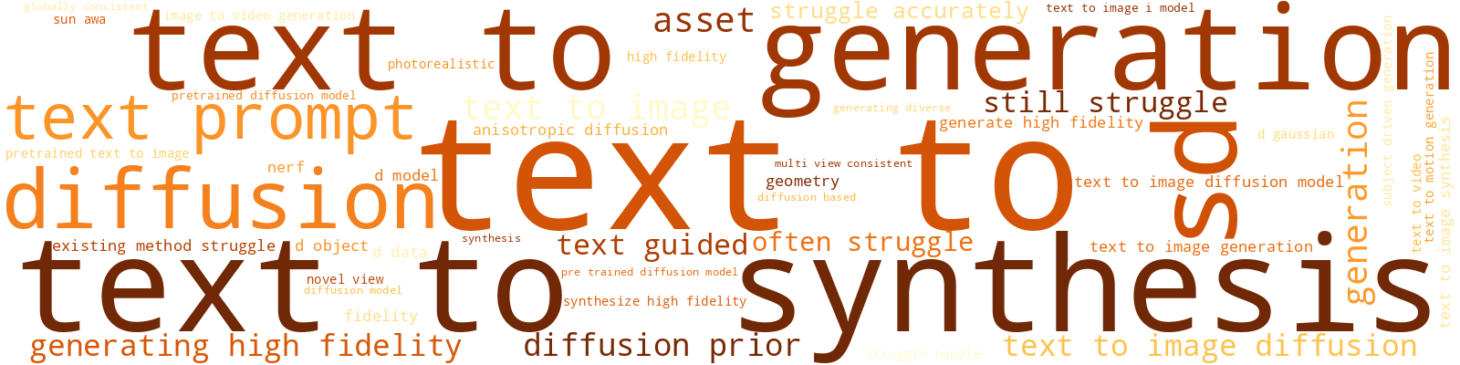

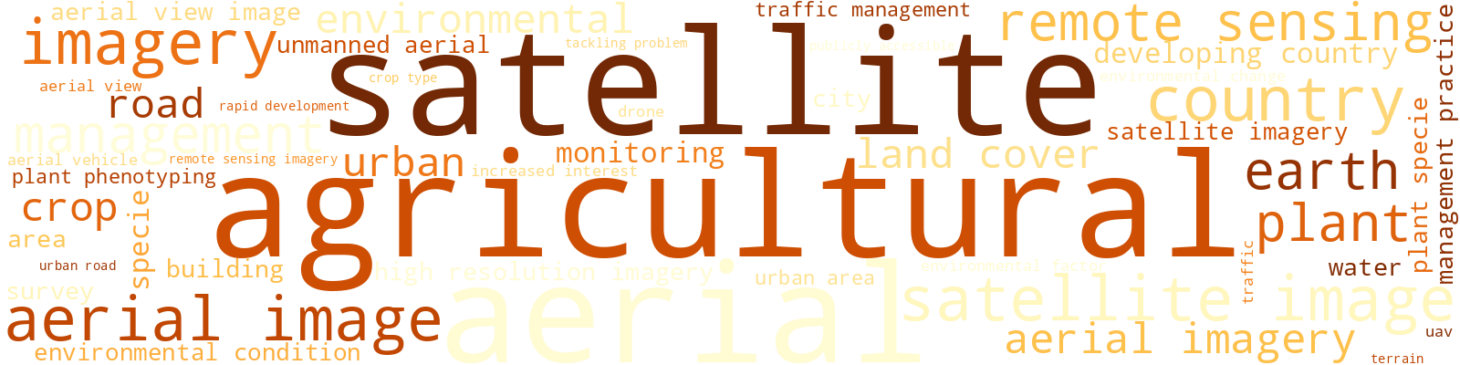

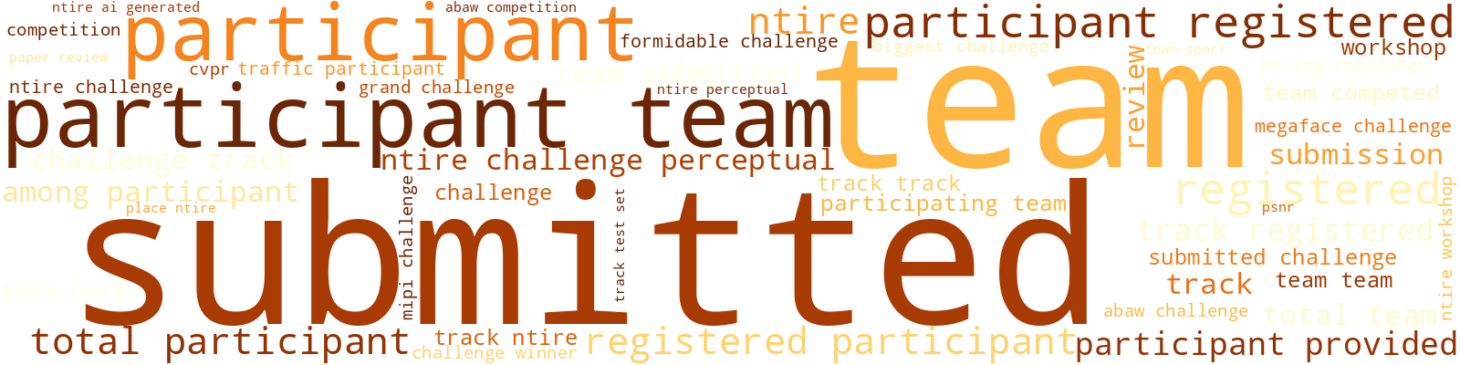

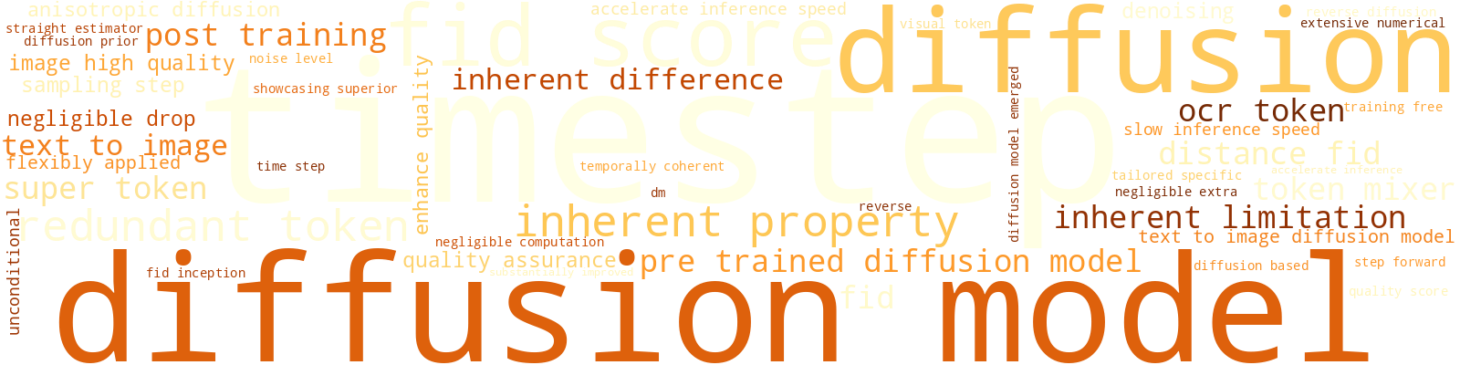

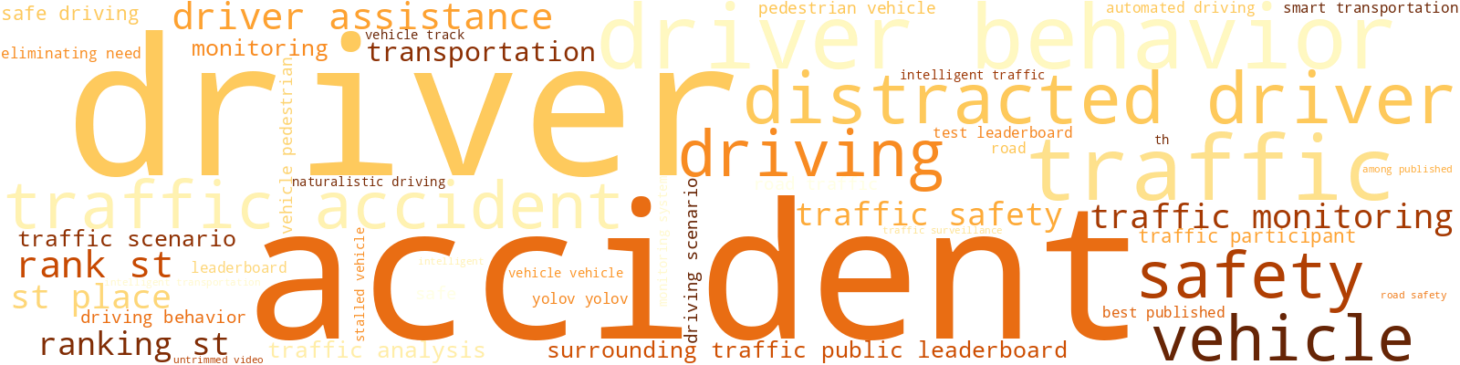

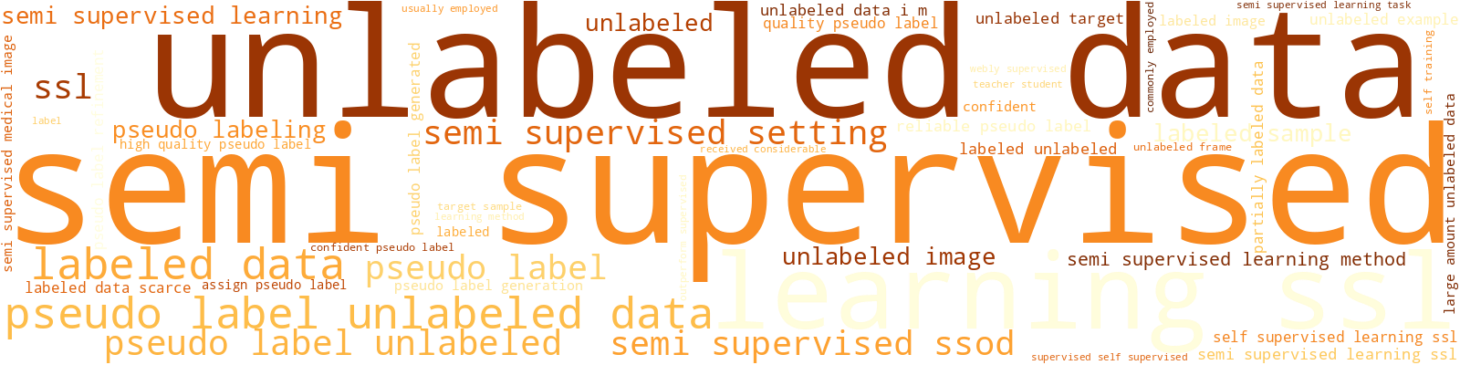

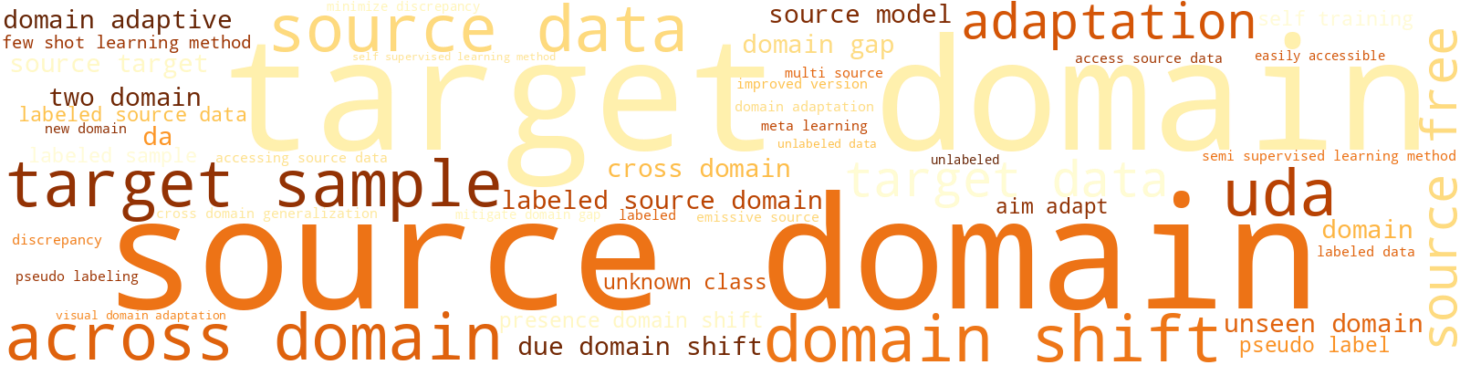

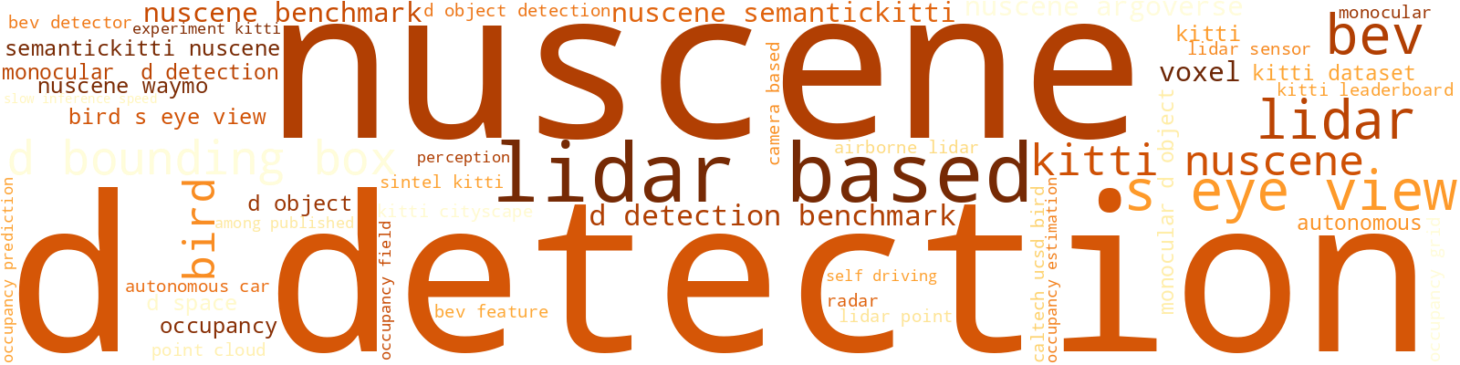

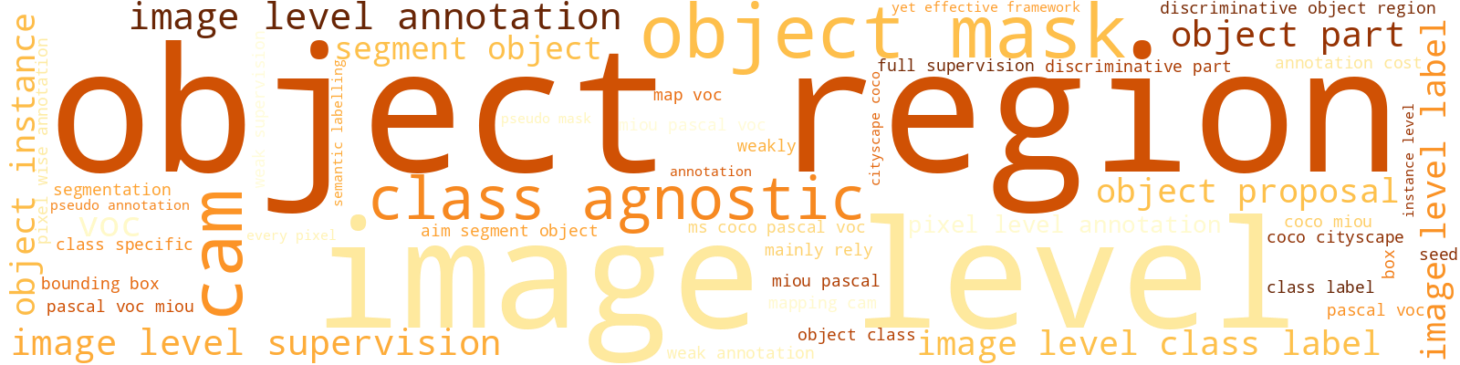

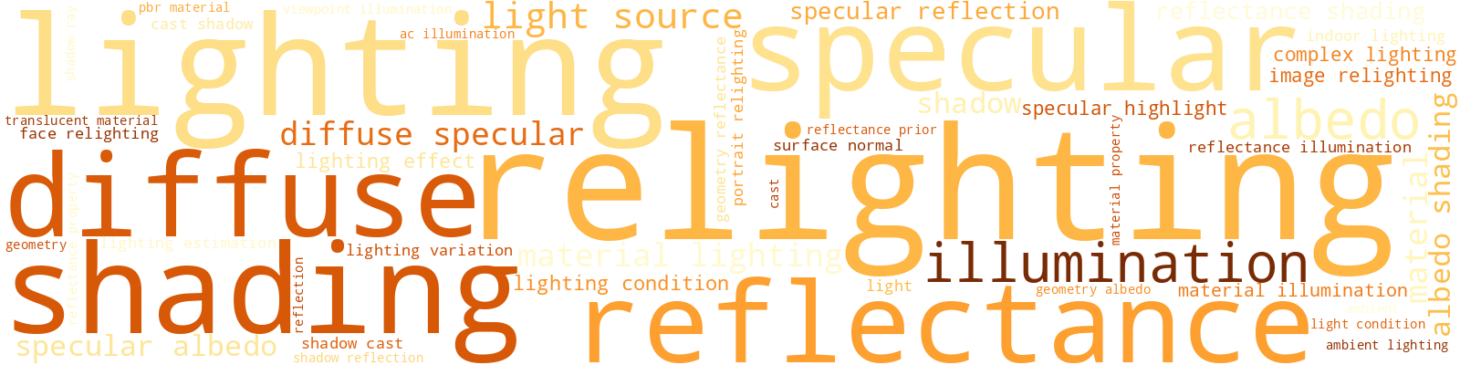

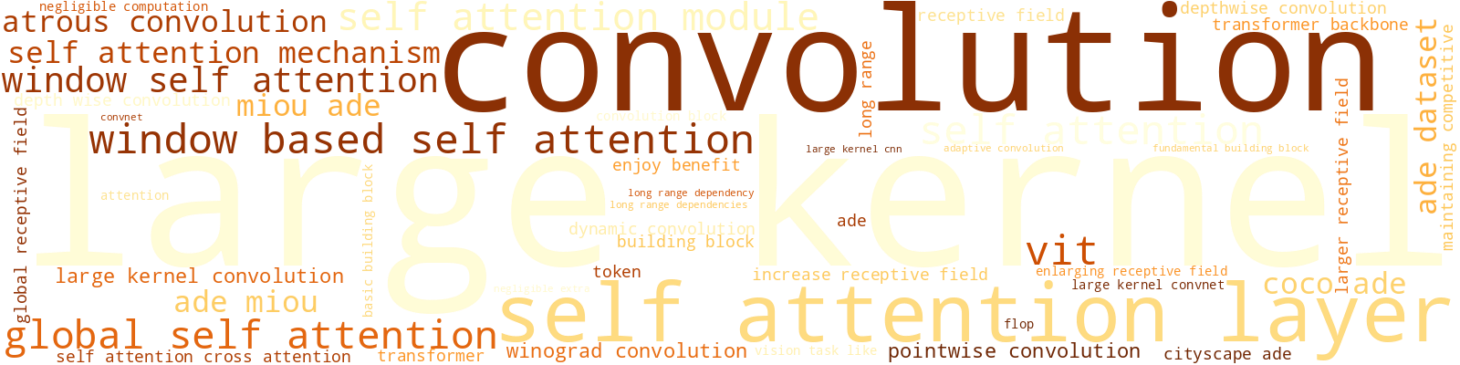

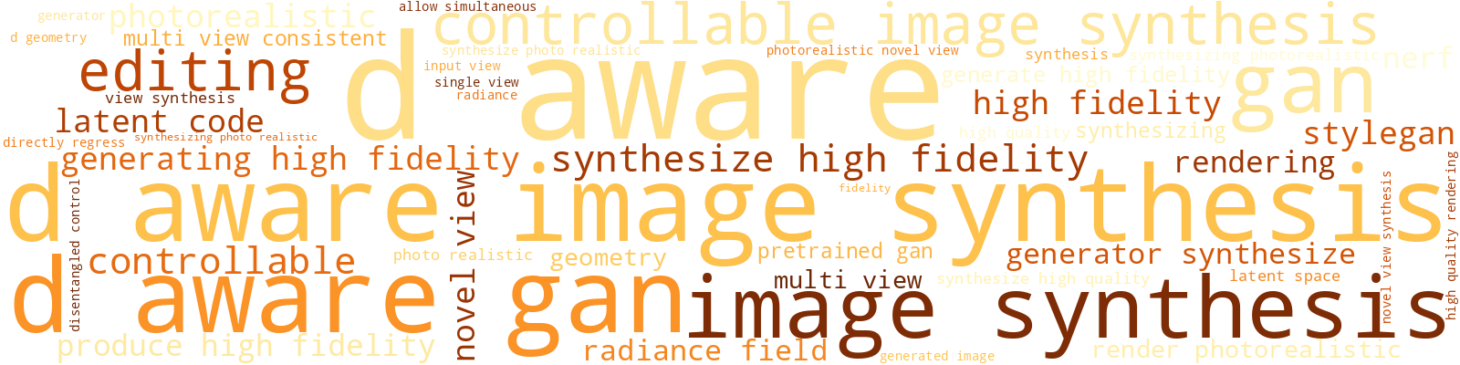

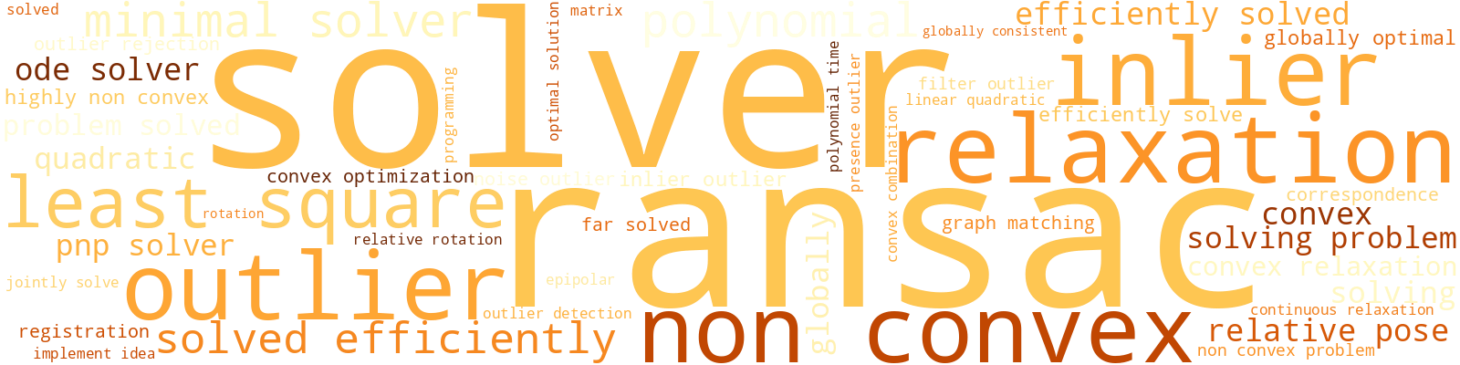

Identifying Topics